Assessing the Assessments

Addressing questions about Ohio’s AIR exams

Beachwood students completed computerized end-of-course exams last month.

The exams, designed by the American Institutes for Research (AIR), assess student skills across grade levels. In high school, AIR tests are administered in English I and II, Biology, American History, American Government, Algebra and Geometry.

Max Xu, Assistant Director of Assessment at the Ohio Department of Education (ODE), explained that the tests are designed to make sure students learn the state-recommended curriculum.

Since the 2013 implementation of the more demanding Common Core standards, Ohio and many other states have raised the rigor of their year-end assessments.

In the past few years, Ohio's state tests have shifted from the Ohio Graduation Tests (OGTs) to end-of-course exams designed by the Partnership for Assessment of Readiness for College and Careers (PARCC), and then to the current assessments designed by AIR.

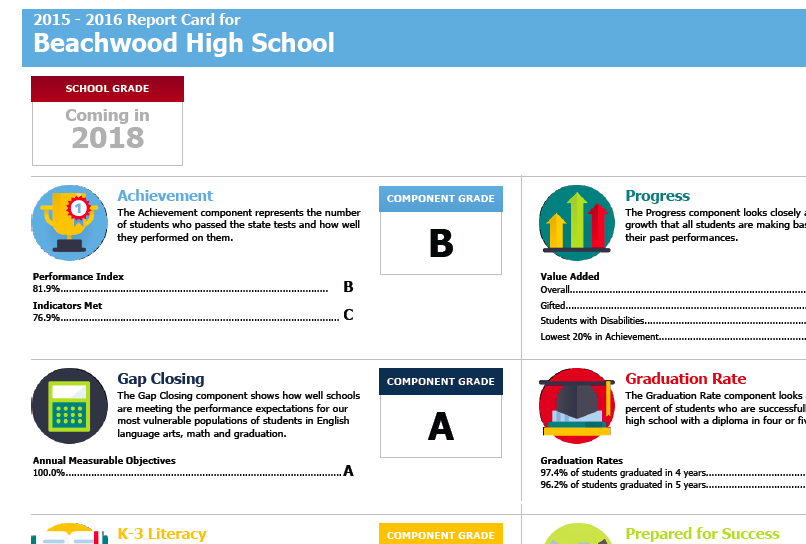

On the 2015-16 school report card, Beachwood High School (BHS) earned an A in Achievement and met indicators in all subjects except English II and Geometry.

Nevertheless, the Beachwood school district fared far better than most and was ranked fifth in the state in an analysis by Cleveland.com.

As the state standards have risen, so have the stakes attached to test performance. Beginning in 2018, students who do not earn at least 18 combined points on the seven end-of-course exams will not graduate from high school, and state law requires that teacher evaluations be tied to student test scores. School districts statewide are rated largely on state test results, and those ratings can influence property values.

Critics have raised concerns about the number of tests students must take, the fairness of the tests and the ability of teachers to prepare students for tests that seem to change from year to year. Some of the main concerns are examined below.

Can End-of-Year Assessments Fairly Assess Student Learning?

While state and federal government policies have increased the use of standardized tests, many professional educators are skeptical of their value.

Social studies teacher John Perse argues that the state tests do not properly reflect what a student knows about history because of the limited number of questions covering an immense amount of history.

“I’m not opposed to a test at the end of the year, but I am opposed to a test that is arbitrary…,” Perse said. “There are only 18 to 24 questions on the standardized test, so how is the test an accurate reflection of the standards?”

“A test only measures knowledge and performance at a single point in time. Every student knows much more than can be assessed,” Ohio School Boards Association Director of Legislative Services Damon Asbury wrote in an email.

Do Teachers Know What Material Will Be Covered on the Tests?

Some feel that the state does not give enough information about the layout and structure of the AIR tests, which are only in their second year.

“Even if the content of a test is not a surprise, the format in which students are supposed to deliver their responses can make a big difference as well,” Superintendent Dr. Bob Hardis said.

LoGalbo explained what information teachers get about the state standards and state tests.

“Teachers get what’s called a test blueprint,” LoGalbo said. “The blueprint breaks down what standards are going to be covered and taught, how many points the tests are worth, and the structure of the tests.”

Xu added that the blueprint states what general skills will be required and how the items are distributed. The ODE notifies principals and superintendents when the blueprints are published online.

AIR Ohio Project Team member Beth Flint added that the blueprint is posted on the ODE website and is available to both students and teachers, though it is most useful to teachers planning their curriculum.

Flint also noted that practice tests are available online to help students become familiar with the test format.

English teacher Casey Matthews feels that the samples provided by the ODE are sufficient.

“We actually do get quite a bit,” she said. “There are practice sites that the ODE gives us to look at, and we know what the tests are going to look like.”

Do Students Have Trouble Navigating Computer-Based Assessments?

In the 2014-15 school year, when PARCC assessments were offered both on paper and online, school districts that chose to give the tests on paper performed better than those that gave the tests online.

Some argue that the difficulty of navigating the online testing system may have contributed to the lower online scores.

However, Xu stated that the ODE’s evidence showed otherwise.

“There is no consistent evidence,” Xu stated. “Our data shows that there’s a little…difference, but it’s not consistent. One test may be better on paper and the other may be better online, a little bit here and a little bit there but not consistent.”

Ohio Federation of Teachers President Melissa Cropper believes some students may still have trouble using the technology.

“I think there are still concerns about technical skills, especially in lower grades,” Cropper said.

Freshman Lexi Glova, who took the ELA AIR test this April, noted that different tests have different features. Online features include drag-and-drop questions, in which students click on answer choices and drag them into the correct box, and fill-in questions, in which students type their answers into a text box.

“[It was] interesting because in the English [test] there was no use of… drag and drop or fill in [options]… [but] they used that in [the] science [test] a lot,” Glova said. “It was used a little bit in math but not at all in language arts.”

Does AIR Use Computers to Grade Student Writing?

Flint stated that handwritten responses are graded by humans. Whether a computer grades online extended-response questions, however, varies from state to state.

Because Ohio's AIR tests are now offered only online, the state is able to use computerized grading of student writing, which saves a significant amount of money.

Machine-grading software such as ETS's e-rater can now assess typed student essays for writing issues such as agreement errors, spelling, usage and sentence structure.

Xu explained that on last year's AIR exams, extended-response questions were computer-graded, and 20% of the answers were then double-checked by a human. This year, all extended-response questions were human-graded, although the ODE plans to increase the use of computer grading in the future.

To prepare computers to grade constructed responses, the ODE collects a variety of student answers and matches each with a point value. These scored responses are then fed to the computer, which uses them as a model for grading students' answers on the actual tests.

The National Council of Teachers of English, among others, has issued a statement opposing computerized assessment of student writing, arguing that such assessment encourages formulaic writing, fails to recognize higher levels of analysis and discourages creativity.

Others have questioned the validity of machine scoring. Les Perelman, an expert on machine scoring who is now retired from MIT, created the Babel Generator, a program that produces nonsensical essays that can nevertheless receive high scores from automated grading software.

Do Teachers Receive Enough Information to Improve Instruction?

Matthews explained that AIR does not release questions after the exams, nor does it tell teachers which specific content standards students are struggling with.

At one time, the ODE released test items and answers for the OGTs and provided a breakdown of how each student answered each question.

“We could see every single answer and every single student who missed every single question; that’s valuable data,” Matthews said.

Xu argued that receiving specific test questions wouldn’t be very beneficial.

“We look at the content areas…so that way you know which areas you’re [strong in]…[which is] more valuable than how you did on a specific test question,” he said.

Xu added that the ODE does release a small percentage of each year's test items. However, every item goes through multiple review committees before appearing on a test, an expensive process.

For that reason, Xu said, releasing all of the items to the public would be too costly, so the remaining items are kept for future use.

Matthews would prefer to get more information regarding the questions that students missed.

“All I get now are last year’s scores,” Matthews said. “But I can’t see what a student has missed…so as a teacher I find that incredibly frustrating because I don’t know what I’m doing really well, and I don’t know where I need to improve.”

Hardis is hopeful that the AIR tests will provide more information in the future.

“I do think in the past, there was more access than there is now,” he said. “That said, we’re in the initial years of these AIR tests, and so perhaps over time, like with the OGT and OAAs, we will have more access to them, and it will lower that anxiety level.”

Students do, however, receive details on how they performed in sub-categories within each test. In addition, Xu explained that the ODE gives each district a data file and score labels, which are not intended for student use. The labels show how each student performed in four or five content areas within a test, such as reading and writing on an English test.

Xu added that each district decides what to do with the labels.

“We do provide a [label],” Xu said. “The data itself is available to the district… It depends on the district… whether they want to show the teachers… we give them a choice.”

Several BHS teachers, however, said they have not seen this information but would like to.

LoGalbo explained that the labels are provided to the schools through the guidance office.

“The labels are placed in the student files,” LoGalbo wrote in an email. “Teachers may look up a student’s testing label in the guidance office. I also provide the principals with a spreadsheet with all the student scores and the state provides above, at/near, below grade level ratings for broad groups of standards such as Information Text, Literary Text and Writing for the ELA exams. The principals share this information with the teachers.”

Flint explained that an online version of the test scores is sent to the district about 45 days after the exam, but students do not see these scores. Students instead receive a printed version, released about one month later.

LoGalbo added that the AIR test results are returned more quickly than previous tests were.

“Obtaining timely feedback is critical. You can’t make changes for something…that [is] a year out of date,” Hardis said. “This past year we received data sooner – we received it over the summer…”

Is the Content on AIR Tests Grade-Level Appropriate?

Last year, the state lowered the minimum score for proficiency on math assessments after only 24% of students performed at a proficient level.

“Even for the honors class, I think some of the content may have been hard to understand,” Glova said regarding this year’s test. “I guess it’s doable — you can kind of figure it out.”

For example, practice test items for freshmen in English I include a passage from Shakespeare's King Lear, which is taught to seniors in AP Literature. Last year's English I practice questions included a passage from Thoreau, which is taught to juniors in American literature.

LoGalbo explained that AIR is intended to be more rigorous than previous state tests.

Flint added that there is an extensive process to determine what material will be on the state tests. Test questions go through multiple committees and are reviewed by experts to ensure fairness and address bias.

AIR also administers “field tests” to students to determine what material will appear on future exams. AIR staff then use these student samples to create a scoring guide and assign point values for extended-response questions.

“Overall, the Ohio Federation of Teachers would like to see the state create a culture where students are engaged and learning for the sake of learning,” Cropper concluded.

Value of the Tests

“The overall purpose is to make sure each student [learns] the Ohio academic content standards,” Xu said.

Asbury added that testing should not be over-emphasized.

“Teachers do the best preparation in all time leading up to the test by ensuring that they have covered the proper content…[and] monitoring student performance…[Teachers should] encourage the students to be calm and not to over-emphasize the testing process,” he wrote in an email.

Overall, Matthews thinks that the state standardized tests are helpful to some extent.

“I understand the purpose of standardized tests…I do think that to some extent standardized tests can measure what a student does know…[and] I don’t mind using a standardized test as a benchmark of how I am as a teacher.”

Prerna Mukherjee has been writing for The Beachcomber since the fall of 2016. She covers a variety of school and community events. In her free time, Prerna...